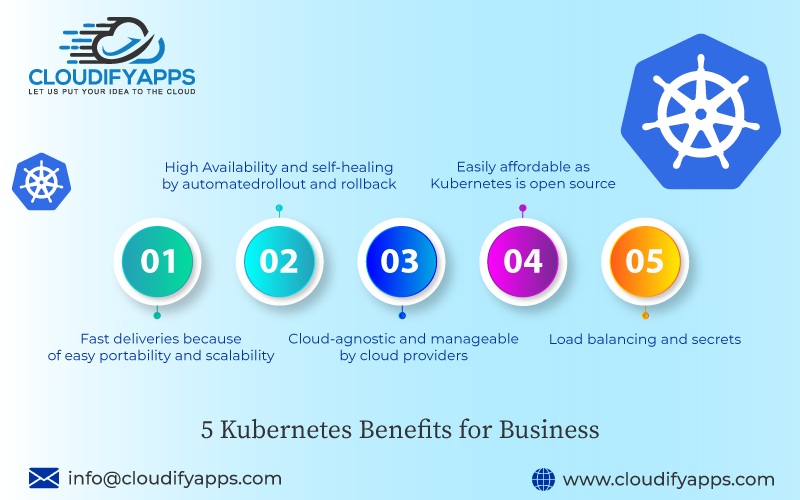

5 Kubernetes Benefits for Business

Introduction:

Containers have dramatically changed modern software deployment. Containerization has emerged as an alternative to, or a form of, operating system virtualization: applications and their dependencies are packaged into a lightweight, portable computing unit capable of running on any infrastructure.

Containerization has helped businesses by enabling software developers to deploy applications quickly and securely. As the technology advanced, however, it also brought new challenges. One of the main concerns with container-based applications is the sheer number of containers now being created and deployed, which complicates the entire software development process.

This is where Kubernetes enters the picture.

What Is Kubernetes?

Kubernetes is an open-source container orchestration system designed to deploy, scale, and manage many containerized applications at once. Google first developed it as a successor to Borg, the company's internal container orchestration system, and open-sourced it in 2014. It is currently the flagship project of the Cloud Native Computing Foundation (CNCF).

Kubernetes groups the containers that run the same application into logical units. These units act as replicas and distribute workloads across several computing resources, which makes them easier to manage and discover.

Kubernetes is flexible enough to grow with a software development team and adapt to the complexity of its applications, which has made it highly successful in the container orchestration market. It has become the de facto container orchestration platform, supported by major IT firms like Google, IBM, Microsoft, AWS, Cisco, Intel, and Red Hat. Read on to find out why.

What Are The Benefits Of Using Kubernetes For Business?

One of the fastest-growing open-source projects, Kubernetes has carved out its niche in the IT scene because of the following benefits it offers the business world:

Fast Deliveries Because Of Easy Portability And Scalability:

Containerizing an application turns it into a fully functional software package that can be executed anywhere, independent of the host environment. Containers have relaxed isolation properties and share the operating system among applications. As a result, they are more portable and lightweight than virtual machines, and a container image is far more efficient to use than a VM image.

As the container orchestrator, Kubernetes makes deploying these containers more straightforward, which speeds up the whole process further. Kubernetes can run containers on public cloud environments, on virtual machines, or on bare metal, so it can be deployed almost anywhere. This portability lets business organizations make use of several cloud providers. Development can also proceed rapidly without re-architecting the infrastructure, which lets development teams scale faster than before.

Kubernetes can provide a Platform-as-a-Service (PaaS) style of delivery, creating a hardware abstraction layer for developers. Development teams can request the resources they need more efficiently, and because the same infrastructure is shared across all teams, additional resources to handle extra workload are easy to obtain.

Kubernetes also supports automatic bin packing: the developer provides Kubernetes with a cluster of nodes on which to run containerized tasks and tells it how much CPU and RAM each container needs. Kubernetes then fits containers onto the nodes to make the most effective use of resources.
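The idea of packing containers onto nodes by their CPU and RAM requests can be illustrated with a short Python sketch. This is not the Kubernetes scheduler's actual algorithm; it is a simplified best-fit heuristic, and the names `Container`, `Node`, and `place`, along with all resource figures, are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    cpu_millis: int  # requested CPU, in millicores
    mem_mib: int     # requested memory, in MiB


@dataclass
class Node:
    name: str
    cpu_millis: int  # CPU still free on the node
    mem_mib: int     # memory still free on the node
    containers: list = field(default_factory=list)

    def fits(self, c: Container) -> bool:
        return c.cpu_millis <= self.cpu_millis and c.mem_mib <= self.mem_mib


def place(containers, nodes):
    """Best-fit sketch: largest requests first, each onto the node
    that would be left with the least spare capacity."""
    unplaced = []
    ordered = sorted(containers, key=lambda c: (c.cpu_millis, c.mem_mib), reverse=True)
    for c in ordered:
        candidates = [n for n in nodes if n.fits(c)]
        if not candidates:
            unplaced.append(c.name)  # no node has room for this request
            continue
        best = min(
            candidates,
            key=lambda n: (n.cpu_millis - c.cpu_millis, n.mem_mib - c.mem_mib),
        )
        best.cpu_millis -= c.cpu_millis
        best.mem_mib -= c.mem_mib
        best.containers.append(c.name)
    return unplaced


nodes = [Node("node-a", 2000, 4096), Node("node-b", 2000, 4096)]
containers = [
    Container("web", 500, 512),
    Container("api", 1000, 1024),
    Container("db", 1500, 3072),
    Container("cache", 250, 256),
    Container("huge", 4000, 8192),
]
unplaced = place(containers, nodes)

for n in nodes:
    print(n.name, n.containers, "free:", n.cpu_millis, "m CPU,", n.mem_mib, "MiB")
print("unplaced:", unplaced)
```

In this toy run, the oversized `huge` container cannot be placed on any node, which mirrors how a workload stays pending when no node can satisfy its resource requests.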

In a case study published by Bose, the consumer electronics and audio equipment manufacturer notes that its developers needed a way to prototype and deploy services quickly for faster production. The company chose a microservices architecture on Kubernetes, along with Prometheus monitoring, and has served over 3 million connected products since 2017.

The company reports that a brand-new service went from concept to production in less than fifteen days, through coding and deployment, with extensive hardening and security testing done during this period. By 2018, the company had more than 1,250 deployments, 1,800 namespaces, and 340 worker nodes in a single production cluster.

High Availability And Self-healing By Automated Rollout And Rollback:

High availability in Kubernetes refers to setting up Kubernetes, along with its components and dependencies, so that there is no single point of failure. A cluster with a single master can easily fail, so a highly available cluster uses multiple master nodes, each with access to the same worker nodes.

Kubernetes components are usually replicated on three masters. If one of these fails, the remaining masters keep the cluster functioning.

Kubernetes provides high availability at two levels: application and infrastructure. A highly available Kubernetes cluster can be set up with the help of kubeadm. With stacked control plane nodes, the setup requires less infrastructure because the etcd members and control plane nodes are co-located. With an external etcd cluster, it requires more infrastructure because the etcd members and control plane nodes are separated.

Kubernetes restarts containers that have failed. It also kills containers that do not respond to the user-defined health check. Containers are not advertised to clients until they are ready to serve.

Self-healing works at the level of pods, the small units that encompass one or more containers. Kubernetes keeps running clusters in their optimal state by monitoring pod status and container status, and it replaces unhealthy containers.

Kubernetes uses two types of probes to check each container. The liveness probe checks whether the container is running; if the probe fails, Kubernetes kills the container and creates a new one. The readiness probe checks whether the container is able to serve requests; if it fails, Kubernetes removes the related pod's IP address from the endpoints that receive traffic, so no requests are routed to it.
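As a rough illustration of how the two probes are declared, the Python sketch below builds a Pod manifest and prints it as JSON. The `livenessProbe` and `readinessProbe` fields are part of the Kubernetes Pod specification, but the pod name, image, port, endpoint paths, and timing values are assumptions chosen for the example.

```python
import json

# Hypothetical pod: the image, port, and probe paths are placeholders.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "probe-demo"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "example.com/app:1.0",
                "ports": [{"containerPort": 8080}],
                # Liveness: repeated failures cause the container to be restarted.
                "livenessProbe": {
                    "httpGet": {"path": "/healthz", "port": 8080},
                    "initialDelaySeconds": 10,
                    "periodSeconds": 5,
                    "failureThreshold": 3,
                },
                # Readiness: while failing, the pod receives no Service traffic.
                "readinessProbe": {
                    "httpGet": {"path": "/ready", "port": 8080},
                    "periodSeconds": 5,
                },
            }
        ]
    },
}

manifest = json.dumps(pod, indent=2)
print(manifest)
```

Since `kubectl apply -f` accepts JSON as well as YAML, the printed manifest could be saved to a file and applied to a test cluster.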

Cloud-agnostic And Manageable By Cloud Providers:

The most critical part of the Kubernetes architecture is the cluster, which comprises many virtual or physical machines. Each machine serves a particular purpose, either as a master or as a node. Each node runs groups of one or more containers (which contain your applications). The master communicates with the nodes to create and destroy containers, and it tells nodes how to re-route traffic based on the new container alignments. Because this model does not depend on where the machines run, Kubernetes behaves in a cloud-agnostic way.

The telecommunications giant Nokia works with telecom operators that run on bare metal, virtual machines, or platforms like VMware Cloud and OpenStack Cloud. According to a report published by the company, Kubernetes fulfilled its objective of running the same product on all of its operators' different infrastructures without altering the product itself.

It's no wonder that the container orchestrator has facilitated Nokia's entry into the 5G world.

Kubernetes separates the infrastructure layer from the application layer, resulting in fewer dependencies in the system and making it easier to implement new features in the app layer. Software development teams can test the same binary artifact independently, without considering the target execution environment. Errors are thus identified in early testing phases, there is no need to run the same test on different infrastructures, and workloads can be moved without completely redesigning the infrastructure.

Companies like Rancher, Cloud Foundry, and Kublr provide tools to help manage Kubernetes clusters. Kubernetes runs on Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Besides supporting this cloud-agnostic use, major cloud providers offer a number of Kubernetes-as-a-service options.

Platforms like Amazon EKS, Google Kubernetes Engine (GKE), Red Hat OpenShift, IBM Cloud Kubernetes Service, and Azure Kubernetes Service provide complete Kubernetes platform management so that developers can focus on other important activities.

Easily Affordable As Kubernetes Is Open Source:

Containers are also very cost-efficient: they are so lightweight that they need less CPU, storage, and memory to function. Multiple containers can fit on a single server, cutting down on infrastructure expenditure. Because containers can run in any environment, the development team spends less time on configuration, and affordable practices like CI/CD can be implemented easily.

Moreover, many container platforms, like Docker, are open-source projects too. Microservice applications also tend to be more cost-effective with containers than with virtual machines, because replicating a container costs less than replicating a VM.

Kubernetes offers more efficient and optimized resource utilization than hypervisors or virtual machines. And since it is open-source software, it opens the door for developers to a massive ecosystem of other open-source programs. Many of these are created specifically as companions to Kubernetes and do not require a license from a proprietary vendor.

In its report, Bose mentions how the free, open-source Kubernetes and other products in the Cloud Native Computing Foundation (CNCF) landscape helped it achieve its current scale and launch its products on time.

For Huawei, a multinational telecommunications company, the rapid increase of new applications across eight data centers made the cost of virtual machine-based apps one of its most critical concerns, and business agility suffered as a result. Once the company shifted around 30% of its development team's applications to Kubernetes, the week-long global deployment cycle shrank to a matter of minutes, and operating expenses fell by 20-30%.

This proved very beneficial for the business. By 2016, Huawei had also become a vendor, offering cross-cloud applications to its customers. This became a form of market leverage that drew in more customers, as Kubernetes made the cross-cloud transition easier.

Load Balancing And Secrets:

Load balancing is the process of distributing network traffic efficiently among several backend services. It is an essential strategy for enhancing scalability and availability. Kubernetes can load-balance external traffic to pods in several ways.

A load balancer acts as an intermediary between client devices and backend servers, distributing incoming requests to any available server or to the server with the least traffic. This systematic distribution of application traffic is easy to implement at the dispatch level.
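The two dispatch strategies described here, sending requests to each server in turn or to the server with the least traffic, can be sketched in a few lines of Python. This is a toy model for illustration only, not how any Kubernetes component is implemented; the class names and backend addresses are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation."""

    def __init__(self, backends):
        self._backends = cycle(backends)

    def pick(self):
        return next(self._backends)


class LeastConnectionsBalancer:
    """Hands out the backend with the fewest active connections."""

    def __init__(self, backends):
        self.active = {b: 0 for b in backends}

    def pick(self):
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call when a connection to the backend closes.
        self.active[backend] -= 1


backends = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]

rr = RoundRobinBalancer(backends)
rr_picks = [rr.pick() for _ in range(4)]

lc = LeastConnectionsBalancer(backends)
first, second = lc.pick(), lc.pick()
lc.release(first)   # the first connection finishes
third = lc.pick()   # so its backend is idle again and is chosen

print(rr_picks)
print(first, second, third)
```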

Ingress is the most popular and arguably the most flexible way to load-balance. An Ingress resource is a set of rules, and an Ingress controller is the daemon that applies those rules. The controller comes with a built-in load-balancing feature.

However, the following components and algorithms can be used for more effective load balancing with Kubernetes:

- Kube-proxy

- IP Virtual Server (IPVS)

- L7 Round Robin

- Ring hash

- Maglev
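To give a feel for one of the listed algorithms, here is a minimal Python sketch of ring hashing (consistent hashing). It assumes SHA-256 as the hash function and 100 virtual points per backend, both arbitrary choices for the example; the `HashRing` class and the pod names are hypothetical, and production implementations differ in detail.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    return int(hashlib.sha256(key.encode()).hexdigest(), 16)


class HashRing:
    """Maps each key to the first backend point clockwise on the ring."""

    def __init__(self, backends, vnodes=100):
        # Each backend is placed on the ring at several virtual points.
        self._ring = sorted(
            (_hash(f"{b}#{i}"), b) for b in backends for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def pick(self, key: str) -> str:
        idx = bisect.bisect(self._points, _hash(key)) % len(self._points)
        return self._ring[idx][1]


ring = HashRing(["pod-a", "pod-b", "pod-c"])
before = {f"client-{i}": ring.pick(f"client-{i}") for i in range(1000)}

# Remove one backend: only the keys it owned should be reassigned.
smaller = HashRing(["pod-a", "pod-b"])
after = {key: smaller.pick(key) for key in before}
moved = sum(
    1 for key in before if before[key] != "pod-c" and before[key] != after[key]
)
print("keys moved that were not on pod-c:", moved)
```

The property on display is that removing a backend disturbs only the clients that were mapped to it, which keeps sessions and caches on the remaining backends intact.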

Because applications are isolated in containers, harmful code in one container is prevented from tampering with other containers or the host system. Moreover, security permissions can be defined to automatically block unwanted components from entering the containers or to limit communication with resources that are not needed.

Kubernetes lets developers and users store sensitive information, like passwords, SSH keys, and OAuth tokens, as Secrets. Secrets can be updated and deployed without rebuilding the container image or exposing them in the stack configuration.
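A minimal sketch of what such a stored secret looks like is shown below: a Python helper that builds a Secret manifest. The `data` field of a Secret holds base64-encoded values, which the helper takes care of; the helper name `make_secret`, the secret name, and the credentials are made up for the example.

```python
import base64
import json


def make_secret(name: str, values: dict) -> dict:
    """Build an Opaque Secret manifest with base64-encoded values."""
    encoded = {
        key: base64.b64encode(value.encode()).decode()
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


# Placeholder credentials, for illustration only.
secret = make_secret("db-credentials", {"username": "admin", "password": "s3cr3t"})
print(json.dumps(secret, indent=2))
```

Note that base64 is an encoding, not encryption, so a manifest like this should still be kept out of version control and access to Secrets should be restricted.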

Conclusion:

Kubernetes, as a container orchestrator, does away with centralized control. Unlike a conventional orchestrator, Kubernetes does not need a defined workflow. Instead, it runs a set of independent control processes that drive the current state toward the user's desired state; the path taken to get there is not important.
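The desired-state idea can be made concrete with a toy control loop in Python. This is only a sketch of the reconciliation pattern, not Kubernetes code; the `reconcile` function and the pod naming scheme are hypothetical.

```python
def reconcile(desired_replicas: int, running: list, next_id: int):
    """One pass of a control loop: compare desired and current state,
    then create or remove pods until they match."""
    running = list(running)
    while len(running) < desired_replicas:
        running.append(f"pod-{next_id}")  # start a replacement pod
        next_id += 1
    while len(running) > desired_replicas:
        running.pop()                     # stop a surplus pod
    return running, next_id


# Initial rollout: nothing is running, three replicas are desired.
pods, next_id = reconcile(3, [], 0)
print(pods)

# A pod crashes; the next pass restores the desired count.
pods.remove("pod-1")
pods, next_id = reconcile(3, pods, next_id)
print(pods)

# The desired state changes to two replicas; the loop scales down.
pods_scaled, next_id = reconcile(2, pods, next_id)
print(pods_scaled)
```

The loop never follows a scripted sequence of steps. Each pass only looks at the gap between the current and desired state, which is why the same code handles rollout, crash recovery, and scaling.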

Kubernetes' ability to carry out rolling updates and its adaptability to different workloads are beneficial features. On top of that, it can self-heal when a pod fails.

The most helpful thing about adopting Kubernetes in a business is that the IT team will have years of research by Google and other developers to fall back on. With 5608 GitHub repositories, the scope is enormous, and this history makes the software credible. Bugs are fixed promptly, and new features are released regularly.
